We now live in a world permeated by computers. From phones to watches, home thermostats to coffee makers, and even ball-point pens, more and more of the gadgets we interact with on a daily basis are general-purpose computational devices in disguise. These “smart” devices differ from ordinary ones in that they are programmable and can therefore respond to users’ specific needs and demands.

For example, I recently bought the Jawbone Up 24, a rubber bracelet fitness monitor that tracks my daily movement. While the Jawbone is an interesting gadget on its own, it also works with a cross-device interface I can program. So now, every time the Up detects that I’ve met my daily goal for number of steps walked, a festive-looking lava lamp in my living room lights up. It’s a small event, but it’s my own personalized celebration to keep me motivated. It’s also an example of the sort of thing devices can do for you if you ask nicely.

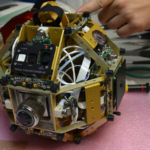

For years, computer scientists have been envisioning a world without boundaries between cyberspace and the physical spaces people occupy, where programmable devices are integrated into larger systems, like smart houses, to make the user’s entire environment programmable.1 This joining of computers and objects is sometimes referred to as the Internet of Things or the Programmable World.(a)

What will this programmable world look like? And how will we get there?

The Rise of Responsive Devices

At the simplest level, a programmable device is one that can take on a variety of behaviors at a user’s command without requiring physical changes. For example, a programmable synthesizer can sound like a number of different instruments depending on the player’s preference, while a traditional piano can sound only the way it was physically designed to sound.

Manufacturers of many common household products are attempting to set themselves apart from the pack by introducing programmability and automation to their products. The humble vacuum cleaner is a star example.(b) iRobot, a company that developed mobile robots for military settings and space exploration, hit it big when it outfitted a small wheeled vacuum cleaner with sensors allowing it to navigate a household autonomously, sucking up the dirt in its path. The result was the Roomba, the most popular of the 10 million home robots iRobot has sold.

To better understand the range of programmable devices currently available to consumers, I gathered and analyzed a list of roughly 500 devices in the Appliances department of an online retailer that include “programmable” in their titles.3 The majority of these devices focus on relatively simple tasks: switching on and off at times predetermined by the user (for example, a coffeemaker starting at 7am each weekday morning), measuring environmental conditions and regulating output in response (a thermostat turning the heat on at a specified temperature), or conducting a sequence of actions set by the user (the cycles of mixing and kneading for a bread maker). Some devices synthesize several of these abilities. The Roomba, for example, can be set to clean in certain patterns, at predefined times, and in specific rooms of the house.
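To make these three kinds of behavior concrete, here is a minimal sketch in Python. It is an illustration only: the function names, parameters, and units are hypothetical and do not come from any real appliance.

```python
from datetime import time
from typing import List, Tuple


def timer_is_on(now: time, start: time, end: time) -> bool:
    """Timer behavior: on between two times the user chose in advance."""
    return start <= now < end


def heat_should_run(measured_temp: float, setpoint: float) -> bool:
    """Sensor behavior: turn the heat on when the room is below the setpoint."""
    return measured_temp < setpoint


def run_sequence(steps: List[Tuple[str, int]]) -> List[str]:
    """Sequence behavior: carry out user-chosen steps in order.

    Each step is a (name, minutes) pair; the returned log stands in
    for actually driving a motor or heating element.
    """
    return [f"{name} for {minutes} min" for name, minutes in steps]
```

A coffeemaker would use something like the first function, a thermostat the second, and a bread maker the third; a device like the Roomba combines more than one.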

With the increased availability of lower-cost smart devices, products that tailor their behavior to consumers’ needs are now accessible to a larger population. But products are only meaningfully programmable insofar as end users – the customers who want to communicate their preferences to these devices – can program them. The evolution of the technology itself will be useless without the evolution of the user’s experience.

Making Products Truly Programmable

The transition to a programmable world only truly begins when control of devices becomes accessible to those with little technical knowledge. Programmable devices are supposed to make our lives easier, but this is only the case if getting a device to perform a task is less challenging and time-consuming than completing the task ourselves. Well-designed devices must have accessible, intuitive interfaces that make it possible for users to communicate their intentions without having to learn any sophisticated programming languages.

This communication was once done through buttons and knobs, but nowadays often happens via remote controls, touch-panel displays, or smartphone apps. And while a good user interface simplifies programming, it also carries with it the cost of learning how to use the interface itself.(c) If users need to learn different interfaces for their vacuums, their locks, their sprinklers, their lights, and their coffeemakers, it’s tough to say that their lives have been made any easier. In that case, all that the gadgetry has done is replace a user’s physical tasks with digital ones.

One promising solution is to combine the interfaces for several products in the same system. The advent of the smartphone has been a boon for programmable devices because it offers a standardized, portable platform for users to interact with their devices. Even something as seemingly simple as a light bulb can now be made to change color and brightness through an iPhone app.(d) (Perhaps we should revise the old joke: How many people does it take to change a light bulb’s color? One, but she needs to download the free app and configure it first.)

However, the smartphone doesn’t completely solve the problem if the interface for each device is different: end users still have too many remotes; they’re all just contained in a phone-sized drawer. Some manufacturers have moved beyond simply putting different apps on the same screen to building integrated platforms that can manage multiple devices. Belkin’s WeMo Home Automation line, for example, allows users to control a range of WeMo products – from heaters to lights to electronics – wirelessly through one app.

Another promising step forward is If-This-Then-That (IFTTT), one of the most successful and advanced attempts at a simple, holistic end-user programming system. IFTTT lets users coordinate over 100 web services, such as Facebook and the New York Times, through trigger-action programming. Users issue commands in the form of “if-then” statements called “recipes,” and the program executes each action automatically when the trigger condition is met.

For example, users can connect the Weather Channel with Gmail so that if there is rain in tomorrow’s forecast, then they will receive an email with a weather update. It was IFTTT that I used to connect my Jawbone fitness monitor to my lava lamp, giving me the little light celebration I mentioned earlier. This event-based programming, in which a machine carries out an action only after a certain event occurs, is intuitive for non-programmers.5
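The shape of a trigger-action recipe can be sketched in a few lines of Python. This is a hypothetical illustration, not IFTTT's actual API: the `Recipe` class, the event fields (`source`, `forecast`, `steps`, `goal`), and the action strings are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]  # hypothetical event format: a plain dictionary


@dataclass
class Recipe:
    name: str
    trigger: Callable[[Event], bool]  # "if this" condition
    action: Callable[[Event], str]    # "then that" response


def run_recipes(recipes: List[Recipe], event: Event) -> List[str]:
    """Run the action of every recipe whose trigger matches the event."""
    return [r.action(event) for r in recipes if r.trigger(event)]


# "If there is rain in tomorrow's forecast, then send me an email."
rain_email = Recipe(
    name="rain email",
    trigger=lambda e: e.get("source") == "weather" and e.get("forecast") == "rain",
    action=lambda e: "send email: rain expected tomorrow",
)

# "If I meet my daily step goal, then turn on the lava lamp."
lava_lamp = Recipe(
    name="step goal celebration",
    trigger=lambda e: e.get("source") == "fitness"
    and e.get("steps", 0) >= e.get("goal", float("inf")),
    action=lambda e: "turn on lava lamp",
)
```

Calling `run_recipes([rain_email, lava_lamp], {"source": "fitness", "steps": 10000, "goal": 10000})` would fire only the lava lamp recipe. Nothing happens until an event arrives, which is the essence of event-based programming.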

My research indicates that robust trigger-action systems may be the best approach for managing a user-friendly programmable world.(e) In a recent survey, my collaborators and I asked people what tasks they would want a smart home to accomplish. The majority (62%) of these tasks could be achieved using trigger-action programming, primarily with a single trigger and single action, creating formulas like “notify me when my pet gets out of the back yard” or “start brewing coffee 15 minutes before my alarm.”8

My collaborators and I then designed two interfaces – a simpler one for single-trigger, single-action formulas and one that could accommodate multiple triggers and actions – and tested whether people could use them. Most participants could, and those who had no programming experience used them with as much ease as those who did. Our study demonstrates that the programmable world can incorporate advanced devices without requiring users to learn complex systems for interacting with them.
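For readers who want to see the difference between the two kinds of formula, here is a rough Python sketch of a multiple-trigger, multiple-action rule. It is not the interface from the study; the `Rule` class, the state keys, and the example rule about sunset are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

State = Dict[str, Any]  # hypothetical snapshot of what the home currently senses


@dataclass
class Rule:
    triggers: List[Callable[[State], bool]]  # every trigger must hold
    actions: List[str]                       # all actions run together

    def fire(self, state: State) -> List[str]:
        """Return the actions to perform, or an empty list if any trigger fails."""
        if all(trigger(state) for trigger in self.triggers):
            return list(self.actions)
        return []


# Hypothetical rule: "If it is after sunset and someone is home,
# then turn on the porch light and lower the blinds."
evening_rule = Rule(
    triggers=[lambda s: s["after_sunset"], lambda s: s["someone_home"]],
    actions=["turn on porch light", "lower blinds"],
)
```

A single-trigger, single-action formula is just the special case where both lists have one entry, which may be part of why people with no programming experience can move between the two.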

Helping Devices Understand Our Desires

Once we can seamlessly connect household devices to their users and to one another, the next challenge will be to create systems that allow for more precise communication between machines and humans. In a perfectly programmable world, our device systems would follow the same rules we do, rules that are much more complex and abstract than programmable devices can currently handle.

Most human behavior is triggered by a number of considerations and sensory inputs. A programmable device can follow a rule like, “At 3pm, lower the blinds,” or even, “If the sun hits my computer screen, then lower the blinds.” However, our human rule for this situation is probably more abstract: “If I need to see the screen and I can’t, figure out why and then do something to make the screen visible.”

Accommodating this more complex kind of behavior would require a type of programming further out on the horizon, one that allows a machine to understand what humans mean rather than simply what they say. Instead of inflexible edicts like “Turn the lights on at 6:15pm,” it would be nicer to tell programmable devices, “Turn the lights on when I get home,” or perhaps even more abstractly, “Turn the lights on when I need to see.”

Events like “when I get home” and “when I need to see” are hard to define explicitly, and identifying them requires the integration of multiple kinds of sensory information. However, they can be inferred from context given the right definitions and environmental data. Techniques from machine learning(f) could allow systems to learn people’s often complex preferences, map the relationship between these preferences and desired actions and outcomes, mediate conflicts between competing triggers and actions, and coordinate activities that affect multiple people with different preferences.9 The Nest thermostat, which learns users’ daily schedules and tries to predict their desired temperature and humidity levels, is an exciting development in this direction.(g)

Looking Forward to the Programmable World

In the future, we are likely to be surrounded by programmable devices and systems that detect our wants and needs and respond accordingly. These machines will keep houses clean and yards maintained to our specifications; modulate light, temperature, and humidity to suit our preferences; reorder household products when needed; allow us to control our locks and security systems from afar; and engage in a host of other tasks tailored to our desires.

The current explosion of programmable devices is just the beginning. The ultimate goal is integrated systems that can detect, learn, and respond to the complex and abstract preferences of human users. Comprehensive paradigms are emerging that will imbue our smart homes and workplaces with a harmonious blend of flexibility and order, personality and technology. The world will be truly programmable once everyone, not just computer scientists or engineers, can control it.

Endnotes

1. For research on creating user-friendly interfaces for smart homes and other programmable environments, see: Yngve Dahl and Reidar-Martin Svendsen (2011) “End-user composition interfaces for smart environments: A preliminary study of usability factors,” Proceedings of Design, User Experience, and Usability. Theory, Methods, Tools and Practice: 118-127. Evgenia Litvinova and Petri Vuorimaa (2012) “Engaging end users in real smart space programming,” Proceedings of the 2012 ACM Conference on Ubiquitous Computing: 1090-1095. Mark W. Newman, Ame Elliott, and Trevor F. Smith (2008) “Providing an integrated user experience of networked media, devices, and services through end-user composition,” Proceedings of the 6th International Conference on Pervasive Computing: 213-227.

2. Bill Wasik (2013) “Welcome to the Programmable World,” Wired, May 14.

3. Michael Littman (2013) “Survey of Programmable Appliances,” Scratchable Devices Blog: End-user programming for household devices, Scratchable Devices, Rutgers University.

4. Scott Davidoff, Min Kyung Lee, Charles Yiu, John Zimmerman, and Anind K. Dey (2006) “Principles of smart home control,” Proceedings of the 2006 ACM Conference on Ubiquitous Computing, 4206: 19-34.

5. John F. Pane, Chotirat “Ann” Ratanamahatana, and Brad A. Myers (2001) “Studying the language and structure in non-programmers’ solutions to programming problems,” International Journal of Human-Computer Studies, 54(2): 237-264.

6. Anind K. Dey, Timothy Sohn, Sara Streng, and Justin Kodama (2006) “iCAP: Interactive prototyping of context-aware applications,” Proceedings of the 4th International Conference on Pervasive Computing, 3968: 254-271.

7. Khai N. Truong, Elaine M. Huang, and Gregory D. Abowd (2004) “CAMP: A magnetic poetry interface for end-user programming of capture applications for the home,” Proceedings of the 2004 ACM Conference on Ubiquitous Computing, 3205: 143-160.

8. Blase Ur, Elyse McManus, Melwyn Pak Yong Ho, and Michael L. Littman (2014) “Practical Trigger-Action Programming in the Smart Home,” Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems (CHI).

9. Scott Davidoff, Min Kyung Lee, John Zimmerman, and Anind K. Dey (2006) “Socially-aware requirements for a smart home,” Proceedings of the International Symposium on Intelligent Environments.

Sidenotes

- (a) In 2013, Wired editor Bill Wasik asserted that society is undergoing a transition into a “programmable world.”2 This shift will consist of three phases: more and more devices will get onto the same network, these devices will work together to automate tasks, and finally, they will be organized into a programmable software platform that can run apps. Futurists have also been heralding the “Internet of Things” – a system of sensor technologies, first predicted by technologist Kevin Ashton in 1999, that will allow objects like cars and appliances to be connected to and controlled by the internet.

- (b) Many programmable devices are not as well established as the Roomba but certainly have the potential to be useful. Exciting examples include Lockitron, a simple device that allows you to lock and unlock your door over the internet, and Hydrawise, an automated sprinkler system that reacts to local weather conditions. Other devices may turn out to be passing fads, such as the Toto Neorest, a computerized toilet that self-flushes, self-closes, and even provides a programmable nightlight for the benefit of the prospective, shall I say, end user.

- (c) A number of studies have identified a threshold in machine capabilities beyond which users stop feeling like they are controlling the device and start feeling like the device is controlling them.4

- (d) Hue light bulbs can be pre-set to turn on and off according to different users’ sleep patterns, eliminating the need for a light switch. Users can also custom-design the lights’ colors from their computer.

- (e) These systems would be similar to IFTTT but allow for more functions and multiple-trigger, multiple-action formulas. Computer scientists have built an interface called the interactive Context-aware Application Prototype (iCAP) that can handle multiple-trigger, multiple-action commands like “If the phone rings in the kitchen and the music is on in an adjacent room or if Joe is outside and the phone rings in the kitchen then turn up the phone volume.”6 A project at the Georgia Institute of Technology called CAMP (Capture and Access Magnetic Poetry) lets users mix and match words divided into who, what, where, and when categories to issue commands.7

- (f) Machine learning refers to a trial-and-error process by which a machine uses the consequences of its previous decisions as feedback to modify future behaviors.

- (g) Nest, recently purchased by Google, is best known for its thermostat, which can learn to turn the temperature down when nobody is home to save money on electricity bills.